Dogukan Tuna

Physics and materials research and development, accelerated by a continual inference engine, at SM-1 | Supermatter (early stage, heavy development)

Multi-agent system infrastructure at Manuel AI — HVAC, energy, marine

I think of AI as the ultimate compressor for the hardest problems, and a genuine superpower for accelerated science. It's time to build, from bits to atoms. That's what I spend my time on: continual learning, multi-agent systems, reinforcement learning infrastructure for LLMs, high-compute RL, megakernels, and large-scale retrieval. Trying to find AGI-level questions to ask. My core focus is RSI scaling through RL compute. Tinkering with how AI models could perform complex, difficult, long-horizon agentic tasks in ultra-realistic settings.

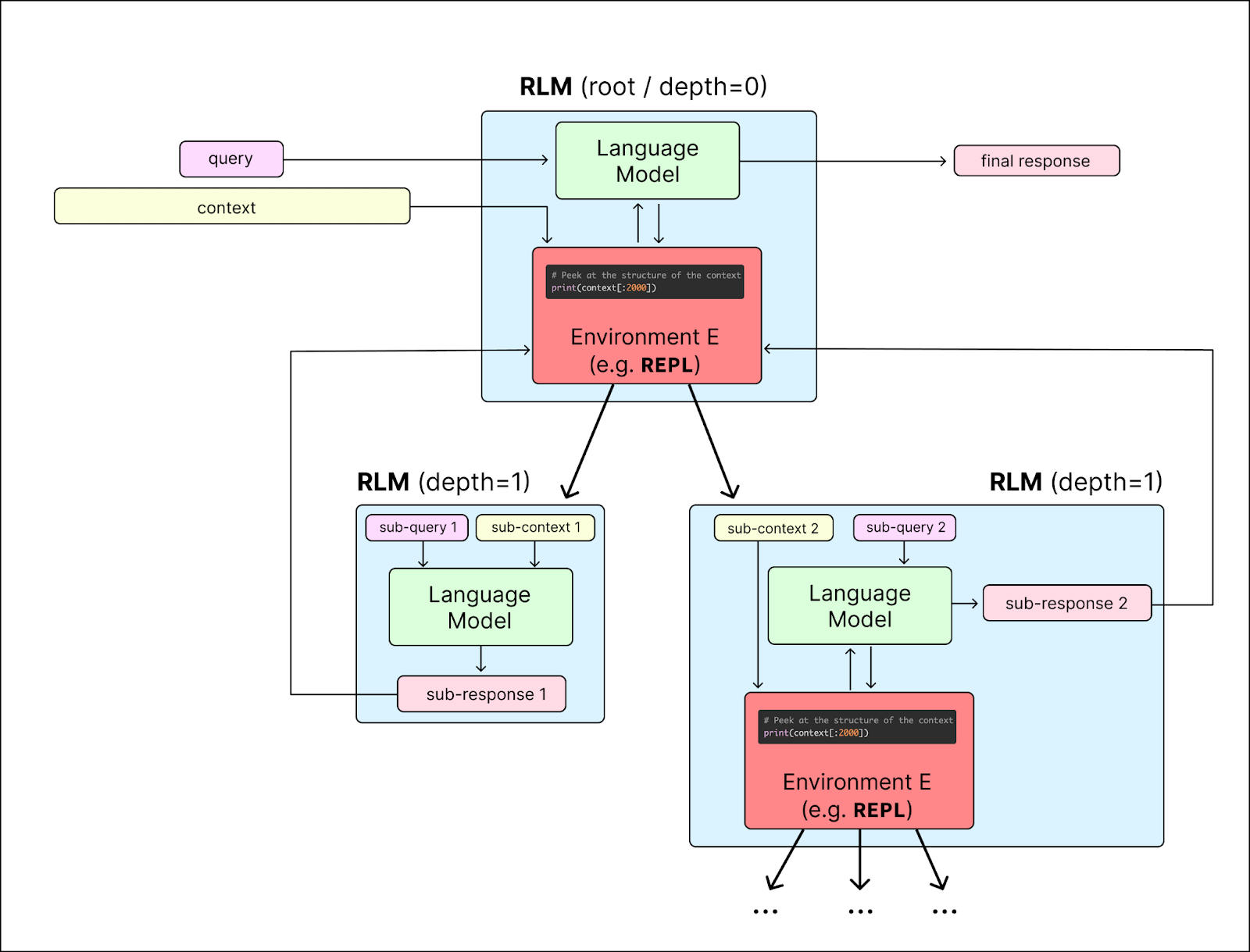

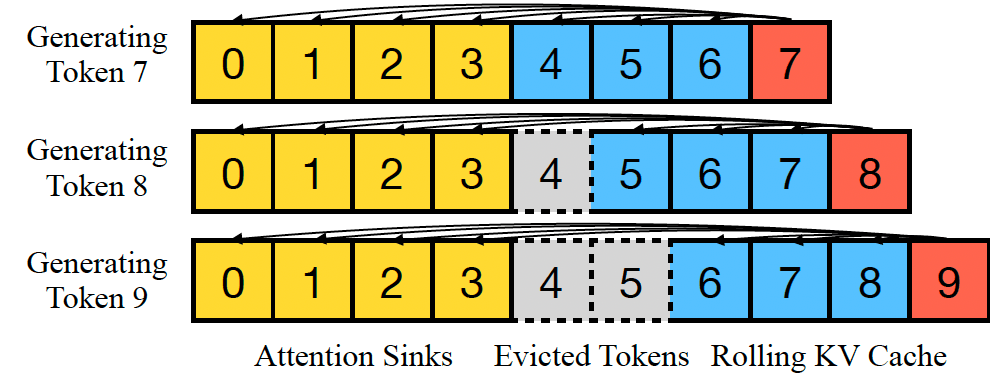

Mem-RLM — Memory-Augmented Inference for Recursive Language Models

LLM inference, memory augmentation, recursive language models, reinforcement learning, AI agents, open source, test-time compute, strategy learning

An open-source memory layer for Recursive Language Models that records execution trajectories, extracts reusable strategies, and injects them into future runs. Models stop starting cold and actually learn which approaches work for which problem types — 26% accuracy improvement on weaker models, fully stateful inference.
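The record, extract, and inject loop described above can be sketched roughly as follows. This is a minimal illustration and not Mem-RLM's actual API: `Trajectory`, `StrategyMemory`, and the problem-type labels are hypothetical names, and ranking strategies by raw success rate is an assumption made for the example.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Trajectory:
    """One finished run (hypothetical structure, for illustration only)."""
    problem_type: str  # e.g. "arithmetic"; the labels are assumptions
    strategy: str      # short description of the approach that was tried
    success: bool      # whether the run reached a correct answer


@dataclass
class StrategyMemory:
    # problem_type -> strategy -> (successes, attempts)
    stats: dict = field(default_factory=lambda: defaultdict(dict))

    def record(self, t: Trajectory) -> None:
        """Store the outcome of a run."""
        wins, tries = self.stats[t.problem_type].get(t.strategy, (0, 0))
        self.stats[t.problem_type][t.strategy] = (wins + int(t.success), tries + 1)

    def best_strategies(self, problem_type: str, k: int = 2) -> list[str]:
        """Return up to k strategies, ranked by observed success rate."""
        ranked = sorted(
            self.stats.get(problem_type, {}).items(),
            key=lambda item: item[1][0] / item[1][1],
            reverse=True,
        )
        return [name for name, _ in ranked[:k]]

    def inject(self, problem_type: str, prompt: str) -> str:
        """Prepend remembered strategies to the prompt of a new run."""
        hints = self.best_strategies(problem_type)
        if not hints:
            return prompt  # nothing learned yet: the run starts cold
        header = "Strategies that worked before:\n" + "\n".join(f"- {h}" for h in hints)
        return header + "\n\n" + prompt
```

A new run would call `inject` before inference and `record` after it, so each problem type gradually accumulates its own ranked list of approaches.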

ContextJira — AI-Native Context Extraction from Jira

developer tools, Chrome Extension, Jira, AI workflow, LLM, productivity, open source, AI developer tools

A Chrome Extension that turns any Jira issue into structured Markdown you can paste straight into Claude, ChatGPT, or any LLM. One click, full context — no manual reformatting, no lost details.

Teaching agents GPU/TPU/QPU compute: An open skill catalog

GPU programming, TPU, QPU, quantum computing, HPC, CUDA, agent skills, open source, accelerated computing

An open-source, growing catalog of structured agent skills for GPU/TPU/QPU-accelerated frameworks and simulators. The goal: cover every mature and experimental HPC compute workload so any agent can pick up working knowledge at inference time.

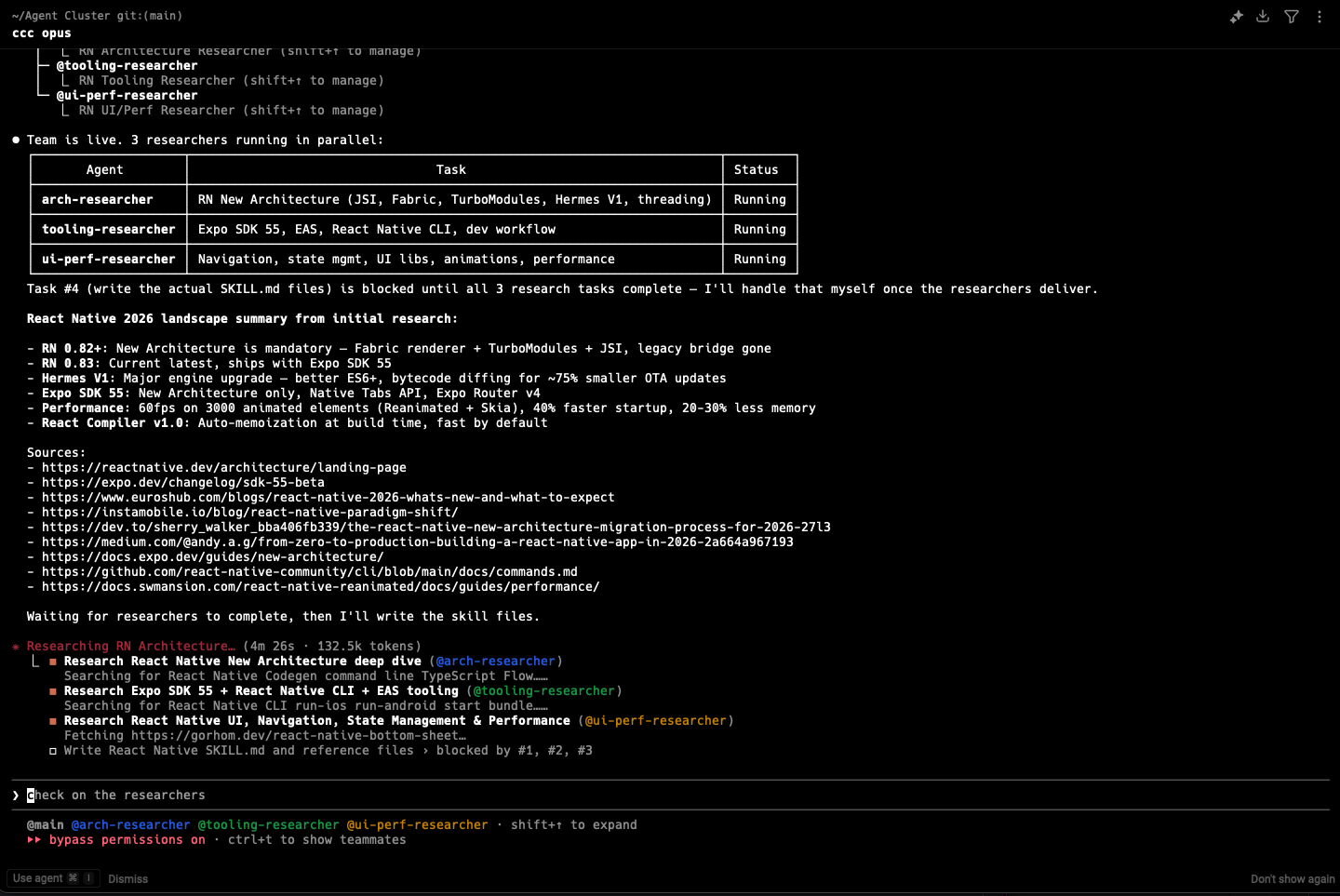

Claude Code-Time Skill Acquisition with Agent Teams

AI agents, Claude Code, multi-agent systems, skill acquisition, agent coordination, knowledge base, React Native

A team of agents researched, synthesized, and integrated a production-grade React Native skill into a shared knowledge base in under 15 minutes — just through coordination at Claude Code-time.

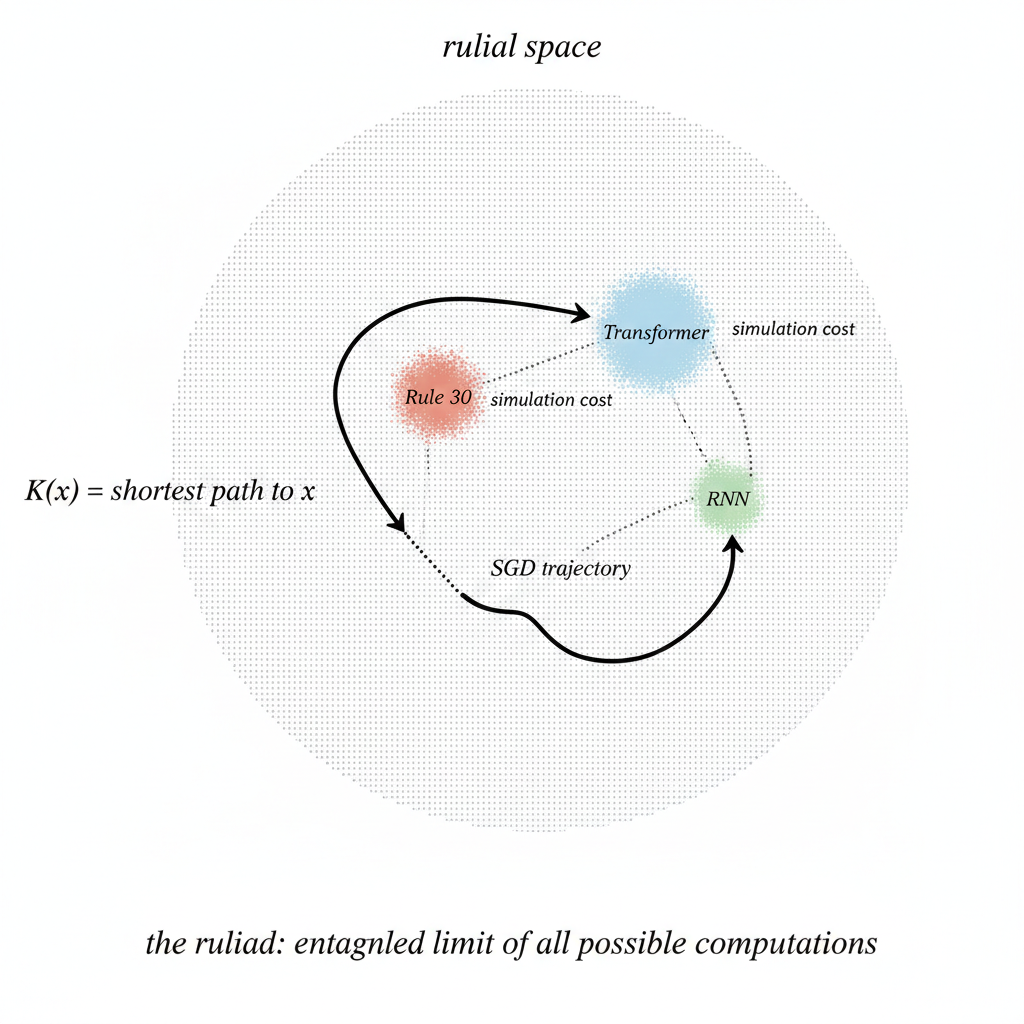

On Compression, Computation and the Space Between

Kolmogorov complexity, information theory, computation theory, neural networks, Wolfram, compression, mathematics

Kolmogorov complexity, neural networks as program search and Wolfram's ruliology seem to be looking at the same thing from different rooms.

Defeating Nondeterminism in LLM Inference: Reproducing Batch-Invariant Ops (RMSNorm & Tiled Matrix Multiplication) in JAX

JAX, GPU kernels, RMSNorm, matrix multiplication, LLM inference, nondeterminism, batch invariance, deep learning

This learning log is the first in a series exploring kernel-related topics. As a starting point, I reproduce batch-invariant neural network operations in JAX, drawing on Thinking Machines Lab's seminal collaborative work, "Defeating Nondeterminism in LLM Inference."
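The root issue behind batch invariance is that floating-point addition is not associative, so the same numbers reduced in a different order can give a different result. The toy below shows this in plain Python rather than JAX. It is a sketch of the underlying arithmetic only, not of the kernels in the post, and `reduce_in_order` and `reduce_with_split` are hypothetical helper names.

```python
def reduce_in_order(values):
    """Sum strictly left to right with an explicit loop.

    The built-in sum() is avoided on purpose, since newer Python
    versions apply compensated summation to floats.
    """
    total = 0.0
    for v in values:
        total += v
    return total


def reduce_with_split(values, split_at):
    """Reduce two tiles independently, then combine the partial sums.

    Changing `split_at` stands in for a kernel choosing a different
    reduction strategy, for example when the batch size changes.
    """
    left = reduce_in_order(values[:split_at])
    right = reduce_in_order(values[split_at:])
    return left + right


values = [0.1, 0.2, 0.3]

a = reduce_with_split(values, split_at=2)  # (0.1 + 0.2) + 0.3
b = reduce_with_split(values, split_at=1)  # 0.1 + (0.2 + 0.3)

print(a, b, a == b)  # the two groupings disagree in the last bit
```

A batch-invariant op fixes the reduction order for each row regardless of how many other rows share the batch, so a given input always sees the same grouping and produces the same bits.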

Streaming deepagents and task delegation with real-time output

LLM agents, streaming, deep agents, multi-agent systems, task delegation, Python, AI engineering

This post demonstrates how to implement streaming on top of the DeepAgents package in a multi-agent setup, with practical code examples and architectural patterns you can apply to your own projects.

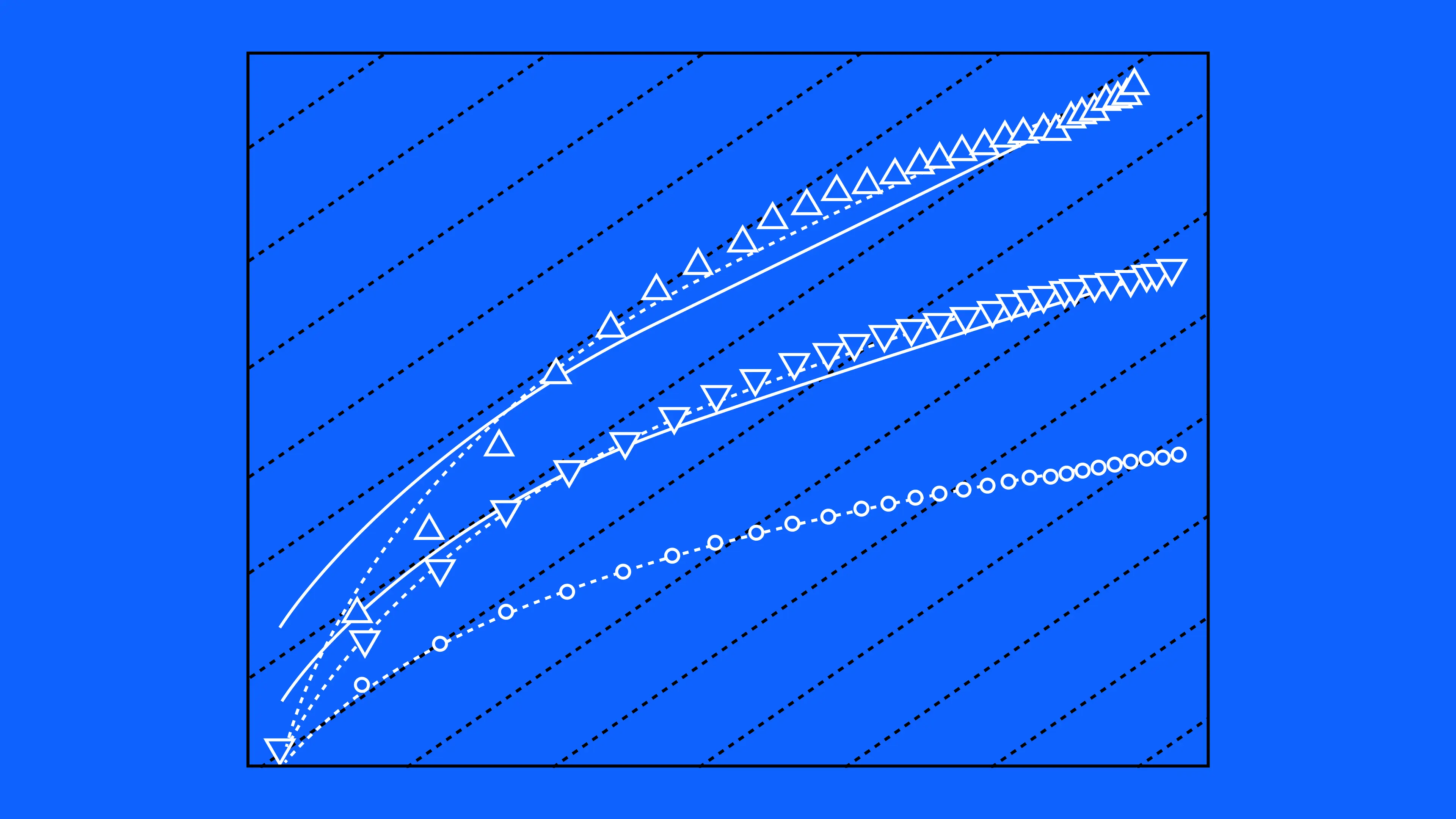

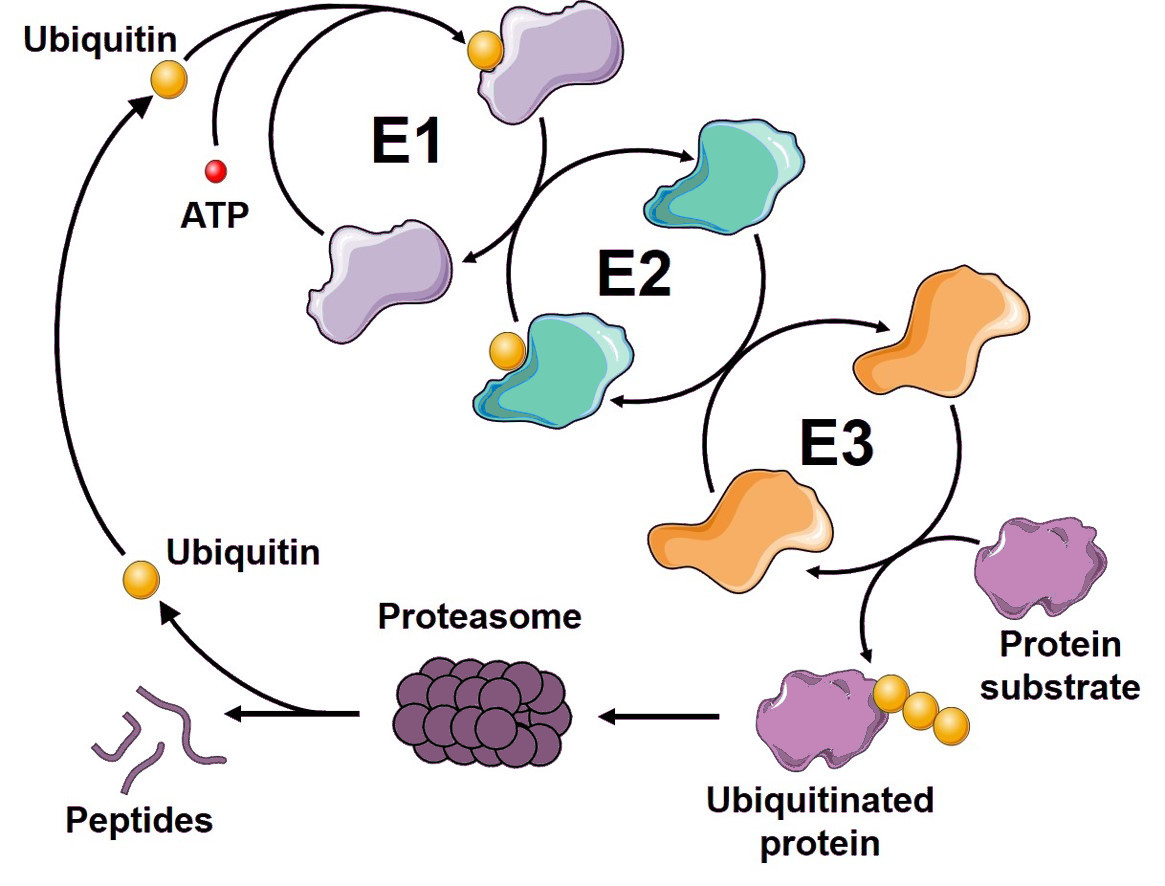

Energetics of Allosteric Communication in Ubiquitin Revealed by Hybrid MCTS-Langevin Simulations

computational biophysics, protein dynamics, Monte Carlo Tree Search, Langevin dynamics, OpenMM, molecular simulation, ubiquitin, allostery

Exploring protein conformational landscapes and identifying potential allosteric communication pathways remain significant challenges in computational biophysics. This study presents a hybrid computational approach combining Monte Carlo Tree Search (MCTS) with Langevin Dynamics (LD) simulations using the OpenMM toolkit to enhance conformational sampling.